When I moved into the software industry as a programmer from the petrochemical field, I needed to step up, and fast, having neither the qualifications nor the experience in programming. I took the obvious route of training myself at home on a home PC. The nerdy dream of setting up my own system and flooding it with the then “hot” tools was too enticing to pass up.

I did what most learners do: popped down to the grey market with my first month’s paycheck and blew it on the latest DDR RAM, SCSI hard drives, anti-glare / stress-free monitors and what not. I added a couple of software CDs (OS, the latest IDEs, enterprise database software, all from the same grey market to boot) and went home tired but happy. Savings? I spent less than 40% of the moolah I would have doled out on a branded desktop and software. Little did I know what I was in for.

It took me close to a day to assemble the stuff (I had no degree in hardware; come to think of it, none in software either), and another day or two to get the OS, DB and IDEs up and running and reach my “Hello World”. Voilà tout: time to get to the serious stuff now.

It started after a couple of weeks, and I experienced this all through the year: the DDR stuff didn’t sit too well in its slot (a tap and scrape with a screwdriver solved this), the monitor showed lines, the dog chewed up the cables and I couldn’t get a “genuine fake” replacement, Windows popped up a message every two minutes saying it suspected a fake edition, the database crashed and the installation CD wouldn’t work. My progress on the “Nerd Goal”? Zilch!! All my efforts went into solving the inanities of the hardware and/or the software, not into the writing, rewriting, white-box testing and optimizing of code that every programmer must go through. Looking back, maybe it was not all that bad: I was, after all, trying to supplement my learning and, somebody hear me, I SAVED 60%, even if I chucked the monster within a year.

I sell enterprise commerce platforms for my daily plate of rice, dal and vegetables (boiled, not fried), and this is a story I share with my prospects when the discussion turns to “Why choose enterprise over freeware?” I call it the Cost of Free. Take a look at the comparative cost items for an assembled, grey-market, freeware-based desktop vs a branded one, which I purchased later on.

Well, seems like the grey market won by about twenty grand, right? Wrong!! Think again. I chucked Option A within a year and then bought Option B (I got a promotion!!), which ran like a charm for the next four years. Understanding the Cost of Free came at a price, and with a startling realization: a product can be pricier than its competitor yet end up costing you less, not to mention all the frustration and angst that come with living with a product like Option A. As you can see below, Option B won by a mile over a four-year period, and by two and a half miles if you add the copious amount of sweat and toil I was spared.
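To put the Price vs Cost arithmetic in one place, here is a minimal Python sketch. The figures are relative and partly hypothetical: the branded desktop is taken as 100 and the grey-market build as 40 (the upper end of the “less than 40%” above), with the one-year and four-year lifetimes from the story. Replacing the grey-market box like-for-like every year is an assumption for illustration, and `cost_over_horizon` is a made-up helper name.

```python
import math

def cost_over_horizon(price, lifetime_years, horizon_years, upkeep_per_year=0.0):
    """Total spend to keep one working machine for horizon_years.

    Assumption: a machine is replaced at the same price whenever it reaches
    the end of its lifetime; upkeep covers repairs, cables, licences and such.
    """
    purchases = math.ceil(horizon_years / lifetime_years)
    return purchases * price + upkeep_per_year * horizon_years

# Relative, hypothetical prices: branded desktop = 100, grey-market build = 40.
grey_market = cost_over_horizon(price=40, lifetime_years=1, horizon_years=4)
branded = cost_over_horizon(price=100, lifetime_years=4, horizon_years=4)
print(grey_market, branded)
```

Under those assumptions the grey-market route costs 160 against 100 for the branded one, before counting a single hour of sweat and toil; the `upkeep_per_year` parameter is where those hidden costs would go.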

The story of enterprise eCommerce platforms vis-à-vis free / low-end ware is pretty much the same, only the stakes are way higher, as you can imagine. Running an online business is not the equivalent of learning to code, and this is a lesson most merchants learn at their own expense. As an online practitioner, you own the online revenue, and your focus is to acquire, engage and retain customers and to generate a predictable revenue stream through the platform, not to scout for odds and ends (read: extensions and modules) to make the platform execute the 101s of classical eCommerce. Think about it: freeware gives you the 3 Cs you need for online commerce, namely Content, Cart and Catalogue capabilities. Enterprise versions come with additional capabilities that accentuate your top line through the 3 Ps: Performance, Personalization and Persistence (Loyalty). I ask again: what are you concerned about, “The Price” or “The Cost”?

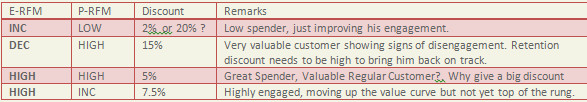

Following the “Who will buy a computer” example, we can tweak the question to “Who will potentially become a ‘Highly Engaged, Valuable’ customer?” or “How do customer demographic patterns impact customer behavioral scores?”
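The “Who will buy a computer” question is the textbook setting for decision-tree learners such as ID3 and C4.5, which split the data on whichever attribute best separates the classes. Below is a minimal Python sketch of that splitting criterion, information gain (ID3’s measure; C4.5 refines it into the gain ratio), applied to a handful of hypothetical customers. The attributes, their values and the `engaged` label are invented for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(rows, attribute, target):
    """Target entropy minus the weighted entropy left after splitting on attribute."""
    base = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[attribute] for r in rows}:
        subset = [r[target] for r in rows if r[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder

# Hypothetical customers: two demographic attributes plus an engagement label.
customers = [
    {"age": "young", "income": "high", "engaged": "yes"},
    {"age": "young", "income": "low", "engaged": "yes"},
    {"age": "mid", "income": "high", "engaged": "no"},
    {"age": "mid", "income": "low", "engaged": "no"},
]
for attr in ("age", "income"):
    print(attr, information_gain(customers, attr, "engaged"))
```

In this toy data `age` separates engaged from non-engaged customers perfectly (a gain of 1 bit) while `income` tells us nothing (a gain of 0), so a tree would split on age first.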


Clustering helps keep the process and the visualization simple, and it is a standard package in most tools. There are different types of clustering, such as partitioning, hierarchical and density-based techniques; we will focus on partitioning techniques such as K-means (C4.5, sometimes mentioned alongside it, is a decision-tree classifier rather than a clustering technique). This technique assumes critical importance for two reasons:


The analysis uses two years of in-store data from a retail chain and depicts purchase behaviour alone. The kinds of personas (the current analysis categorizes Loyal, Potential, Nascent, etc.) and segments you could derive are virtually endless, depending on the clustering parameters that fit your business model and consumers. I will try to bring in all the behaviors we talked about in earlier posts, e.g. "Young, Valuable, Engaged, Heavy Browsing, Electronic Geek" or "Mid-Aged, Potential, Slightly Disengaged, Stationery Buyer", and so on. Some level of intelligent analysis is necessary to arrive at the critical consumer parameters that drive your business. But we'll never know unless we try, will we?
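A minimal Python sketch of the partitioning idea, K-means (Lloyd's algorithm), applied to purchase behaviour. Everything specific here is an assumption: the two features (number of purchases and total spend over two years), the nine customers, the deterministic farthest-first seeding, and the rule that names clusters Loyal, Potential and Nascent by ranking their average spend.

```python
import math

def farthest_first_seeds(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add
    the point farthest from its nearest already-chosen seed."""
    seeds = [points[0]]
    while len(seeds) < k:
        seeds.append(max(points, key=lambda p: min(math.dist(p, s) for s in seeds)))
    return seeds

def kmeans(points, k, max_iter=100):
    """Lloyd's K-means: alternate assignment and centroid-update steps."""
    centroids = farthest_first_seeds(points, k)
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point switched clusters
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignment

# Hypothetical purchase-only features per customer:
# (number of purchases over two years, total spend in thousands)
customers = {
    "c1": (40, 12.0), "c2": (38, 11.5), "c3": (42, 13.0),
    "c4": (15, 4.0), "c5": (12, 3.5), "c6": (14, 4.2),
    "c7": (2, 0.5), "c8": (3, 0.8), "c9": (1, 0.3),
}
ids = list(customers)
centroids, assignment = kmeans([customers[i] for i in ids], k=3)

# Illustrative naming rule (assumption): rank clusters by centroid spend.
names = ["Loyal", "Potential", "Nascent"]
ranked = sorted(range(3), key=lambda c: centroids[c][1], reverse=True)
persona_of_cluster = {c: names[rank] for rank, c in enumerate(ranked)}
personas = {i: persona_of_cluster[a] for i, a in zip(ids, assignment)}
print(personas)
```

With these made-up numbers the three heavy spenders land in the Loyal cluster, the middle trio in Potential and the occasional shoppers in Nascent. On real data you would standardize the features first (purchase counts and spend sit on very different scales) and try several values of k before settling on personas.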


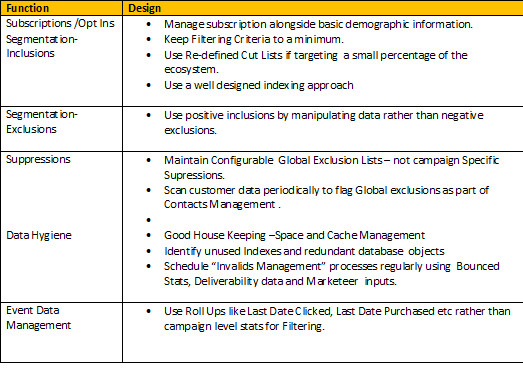

This kind of “Contention Analysis” is a highly useful tool for deciding operational strategy, system housekeeping and maintenance requirements, and ultimately for managing campaign performance.
